It probably won’t surprise you that I’m not just working on robotics; I’m working on a few different kinds of automation. I was always a bit bearish on AI, but sometime around GPT-3 I started to realize that these models can solve a lot of problems that have traditionally required a human.

A few years ago, I spent a lot of staff time and money putting together ‘The Bug Patrol’, a little software testing service that I hoped could become a new line of revenue for my company. Unfortunately, when we actually put it in front of customers, it was just too expensive. We needed human beings to run the tests, because someone had to actually recognize what was on screen and take the appropriate action.

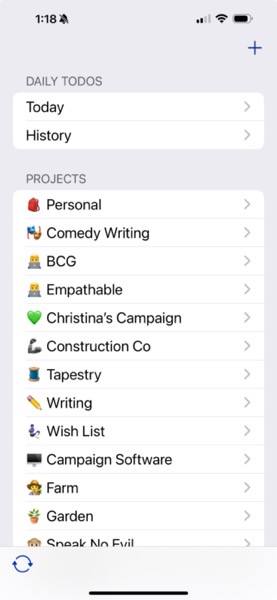

But GPT4 is doing a bang up job. Here’s a screenshot of my todo list app:

When I ask ChatGPT (GPT-4o) the following query:

> List the elements you see on this mobile app screenshot. Then list all the elements that you believe should be tappable.

Here’s the result I get back:

**Elements on the Mobile App Screenshot:**

- **Daily Todos Section:**
  - Today
  - History
- **Projects Section:**
  - Personal
  - Comedy Writing
  - BCG
  - Empathable
  - Christina’s Campaign
  - Construction Co
  - Tapestry
  - Writing
  - Wish List
  - Campaign Software
  - Farm
  - Garden
  - Speak No Evil

**Elements that Should be Tappable:**

- **Daily Todos Section:**
  - Today
  - History
- **Projects Section:**
  - Personal
  - Comedy Writing
  - BCG
  - Empathable
  - Christina’s Campaign
  - Construction Co
  - Tapestry
  - Writing
  - Wish List
  - Campaign Software
  - Farm
  - Garden
  - Speak No Evil
- **Other Interface Elements:**
  - The plus (+) button at the top right corner of the screen (likely to add a new item).

That’s pretty darn good, even though it did miss the ‘sync’ button at the bottom of the screen.
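Checking results like this by eye gets tedious across many screens. One way to automate it is to compare the model’s tappable list against a hand-labelled list of controls and report what it missed. This is a minimal sketch under loud assumptions: `EXPECTED_TAPPABLE` is hypothetical (it assumes the rest of the model’s todo-app list was correct and adds the ‘sync’ button it missed), the plus button’s label is shortened, `normalize` and `score` are illustrative names, and exact matching after case-folding is a crude stand-in for fuzzier label matching.

```python
# Illustrative data: the todo-app reply's tappable list (the plus button's label
# is shortened), plus an ASSUMED hand-labelled ground truth that adds the
# "Sync" button the model missed. Neither list comes from a real test harness.
MODEL_TAPPABLE = [
    "Today", "History",
    "Personal", "Comedy Writing", "BCG", "Empathable", "Christina's Campaign",
    "Construction Co", "Tapestry", "Writing", "Wish List", "Campaign Software",
    "Farm", "Garden", "Speak No Evil",
    "Plus (+) button",
]
EXPECTED_TAPPABLE = MODEL_TAPPABLE + ["Sync"]


def normalize(label: str) -> str:
    """Crude label normalization: trim whitespace and ignore case."""
    return label.strip().casefold()


def score(found: list[str], expected: list[str]) -> tuple[list[str], list[str], float]:
    """Return (missed, extra, recall) for the model's labels vs. the expected labels."""
    found_set = {normalize(x) for x in found}
    expected_set = {normalize(x) for x in expected}
    missed = sorted(expected_set - found_set)  # controls the model never mentioned
    extra = sorted(found_set - expected_set)   # labels with no expected counterpart
    recall = len(found_set & expected_set) / len(expected_set) if expected_set else 1.0
    return missed, extra, recall


missed, extra, recall = score(MODEL_TAPPABLE, EXPECTED_TAPPABLE)
print(missed, extra, f"{recall:.2f}")  # prints: ['sync'] [] 0.94
```

Recall is the number that matters most for app mapping: a control the model never mentions is a part of the app a test bot would never reach.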

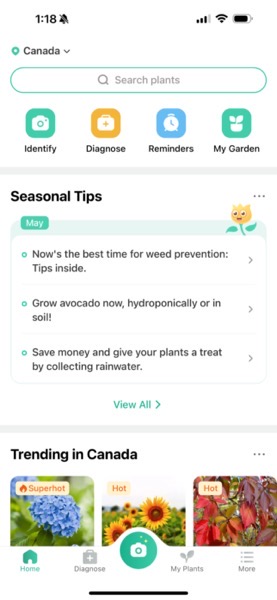

Let’s try again with another screenshot. This is an app called “PictureThis” that I use to recognize plants in my garden.

Again, I ask ChatGPT (GPT-4o) the same query:

> List the elements you see on this mobile app screenshot. Then list all the elements that you believe should be tappable.

And it returns:

**Elements on the Mobile App Screenshot:**

- **Location:**
  - Canada (with a dropdown arrow)
- **Search Bar:**
  - Search plants
- **Navigation Icons:**
  - Identify (camera icon)
  - Diagnose (first aid icon)
  - Reminders (clock icon)
  - My Garden (plant icon)
- **Section: Seasonal Tips**
  - May (tag)
  - Tips:
    - Now’s the best time for weed prevention: Tips inside.
    - Grow avocado now, hydroponically or in soil!
    - Save money and give your plants a treat by collecting rainwater.
  - View All (link)
- **Section: Trending in Canada**
  - Superhot (label on a blue flower image)
  - Hot (label on a sunflower image)
  - Hot (label on a red leaves image)
- **Bottom Navigation Bar:**
  - Home (home icon)
  - Diagnose (first aid icon)
  - My Plants (plant icon)
  - More (menu icon)

**Elements that Should be Tappable:**

- **Location:**
  - Canada (with a dropdown arrow)
- **Search Bar:**
  - Search plants
- **Navigation Icons:**
  - Identify
  - Diagnose
  - Reminders
  - My Garden
- **Section: Seasonal Tips**
  - Now’s the best time for weed prevention: Tips inside.
  - Grow avocado now, hydroponically or in soil!
  - Save money and give your plants a treat by collecting rainwater.
  - View All
- **Section: Trending in Canada**
  - Superhot (image)
  - Hot (sunflower image)
  - Hot (red leaves image)
- **Bottom Navigation Bar:**
  - Home
  - Diagnose
  - My Plants
  - More

It missed the camera icon at the bottom, but given an incredibly unoptimized query, that’s a great set of results.
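Tuning that unoptimized query is easier from code than from the ChatGPT window. As a minimal sketch, assuming OpenAI’s Chat Completions request format (a `messages` list whose user content mixes `text` and `image_url` parts), the function here builds a request body that pairs the prompt with a base64-encoded PNG screenshot. `build_vision_request` is a hypothetical helper name, and the model name `gpt-4o` is assumed to be the API counterpart of the model used in ChatGPT above.

```python
import base64
import json

# The same prompt used in the ChatGPT examples above.
PROMPT = (
    "List the elements you see on this mobile app screenshot. "
    "Then list all the elements that you believe should be tappable."
)


def build_vision_request(png_bytes: bytes, prompt: str = PROMPT, model: str = "gpt-4o") -> dict:
    """Return a Chat Completions request body pairing a text prompt with a PNG screenshot.

    Assumption: the screenshot is inlined as a base64 data URL rather than hosted at a URL.
    """
    data_url = "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Serialize the body; only the first few characters are shown here.
print(json.dumps(build_vision_request(b"\x89PNG"))[:40])
```

This only builds the JSON body; actually sending it requires an API key and an HTTP POST to `https://api.openai.com/v1/chat/completions`. Keeping the prompt as a parameter makes it cheap to try variations (for example, asking for the output as JSON) and compare results across the same screenshots.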

The long and short of it is that we might be able to use AI image recognition to map out apps and build better automated tests. I’m now implementing some Bluetooth code to mimic keyboard and mouse events, just to see how far this can go.
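For the Bluetooth side, one plausible starting point, assuming the device presents itself as a standard HID mouse using the boot-protocol report (one byte of button bits followed by signed 8-bit X and Y displacements), is to turn a “tap here” decision into a short sequence of relative-move reports followed by a click. `mouse_report` and `move_and_tap` are hypothetical helper names, and nothing here opens an actual Bluetooth connection; it only produces the report bytes.

```python
import struct

# Assumption: standard HID boot-protocol mouse report (3 bytes):
#   byte 0: button bits (bit 0 = left, bit 1 = right, bit 2 = middle)
#   byte 1: X displacement, signed 8-bit (-127..127)
#   byte 2: Y displacement, signed 8-bit (-127..127)
LEFT_BUTTON = 0x01
MAX_STEP = 127


def mouse_report(buttons: int, dx: int, dy: int) -> bytes:
    """Pack one boot-protocol mouse report; dx and dy must fit in -127..127."""
    if not (-MAX_STEP <= dx <= MAX_STEP and -MAX_STEP <= dy <= MAX_STEP):
        raise ValueError("displacement out of range for a single report")
    return struct.pack("<Bbb", buttons, dx, dy)


def move_and_tap(dx: int, dy: int) -> list[bytes]:
    """Split a relative move into reports of at most 127 units per axis, then click."""
    reports = []
    while dx or dy:
        step_x = max(-MAX_STEP, min(MAX_STEP, dx))
        step_y = max(-MAX_STEP, min(MAX_STEP, dy))
        reports.append(mouse_report(0, step_x, step_y))
        dx -= step_x
        dy -= step_y
    reports.append(mouse_report(LEFT_BUTTON, 0, 0))  # press left button
    reports.append(mouse_report(0, 0, 0))            # release
    return reports
```

For example, `move_and_tap(300, -50)` yields three move reports (127, 127, then 46 units on X, with the -50 on Y folded into the first) followed by a press and a release. Because the pointer moves relatively and the host may apply acceleration, mapping a screenshot coordinate to the right number of units would likely need calibration on each device.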